A major part of all future AI applications is building networks that are capable of learning from some dataset and then generating original content. This idea has been applied to Natural Language Processing (NLP) and that is how the AI community developed something called Language Models

The premise of a Language Model is to learn how sentences are built in some body of text and use that knowledge to generate new content

In my case, I wanted to try out Rap Generation as a fun side project to see if I can recreate lyrics of a popular Canadian rapper Drake (a.k.a. #6god)

I also want to share a general Machine Learning project pipeline, as I found that building something of your own is often very difficult if you don’t exactly know where to start.

1. Getting the Data

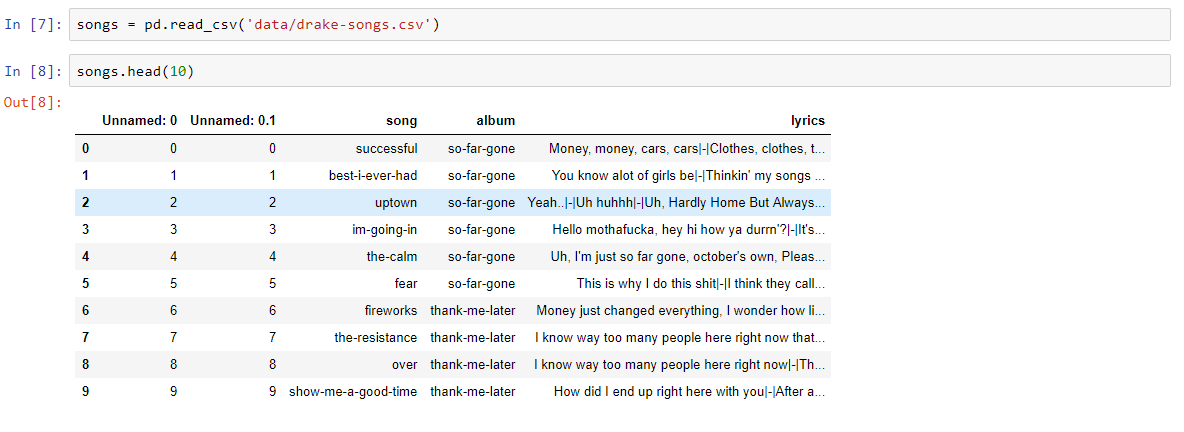

It all started off with looking for a dataset of all of Drake’s songs, I didn’t want to waste too much time, so I just built a quick script myself that scrapes web pages of a popular website called metrolyrics.com

import urllib.request as urllib2

from bs4 import BeautifulSoup

import pandas as pd

import re

from unidecode import unidecode

quote_page = 'http://metrolyrics.com/{}-lyrics-drake.html'

filename = 'drake-songs.csv'

songs = pd.read_csv(filename)

for index, row in songs.iterrows():

page = urllib2.urlopen(quote_page.format(row['song']))

soup = BeautifulSoup(page, 'html.parser')

verses = soup.find_all('p', attrs={'class': 'verse'})

lyrics = ''

for verse in verses:

text = verse.text.strip()

text = re.sub(r"\[.*\]\n", "", unidecode(text))

if lyrics == '':

lyrics = lyrics + text.replace('\n', '|-|')

else:

lyrics = lyrics + '|-|' + text.replace('\n', '|-|')

songs.at[index, 'lyrics'] = lyrics

print('saving {}'.format(row['song']))

songs.head()

print('writing to .csv')

songs.to_csv(filename, sep=',', encoding='utf-8')

I used a well known Python package BeautifulSoup to scrape the pages, which I picked up in about 5 minutes from this awesome tutorial by Justin Yek. As a note, I actually predefined what songs I want to acquire form metrolyrics, that’s why you might notice that I’m iterating over my songs data frame in the code above.

After running the scrapper, I had all of my lyrics in proper formatted .csv file and was ready to start preprocessing data and building the model.

2. Different Approaches

Now, we are going to talk about the model for text generation, this is really what you are here for, it’s the real sauce - raw sauce. I’m going to start off by talking about the model design and some important elements that make lyric generation possible and then, we are going to jump into the implementation of it.

There are two main approaches to building Language Models: (1) Character-level Models and (2) Word-level models.

The main difference for each one of the models comes from what your inputs and outputs are, and I’m going to talk exactly about how each one of them works here.

Character Level model

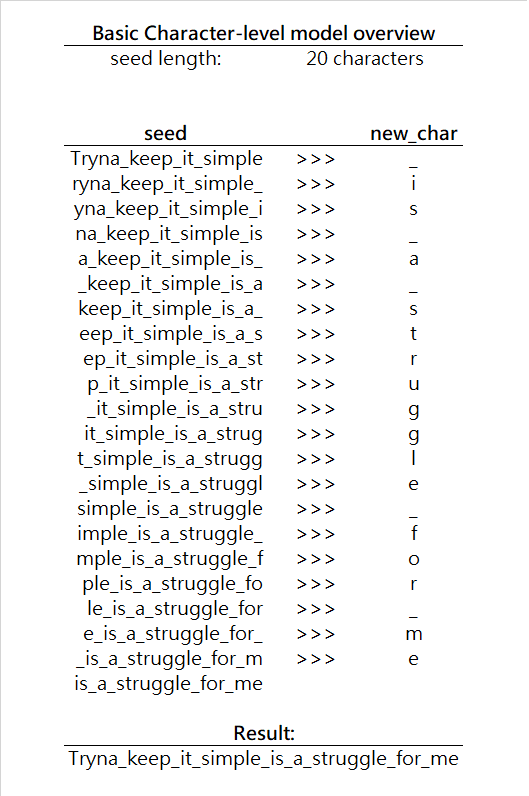

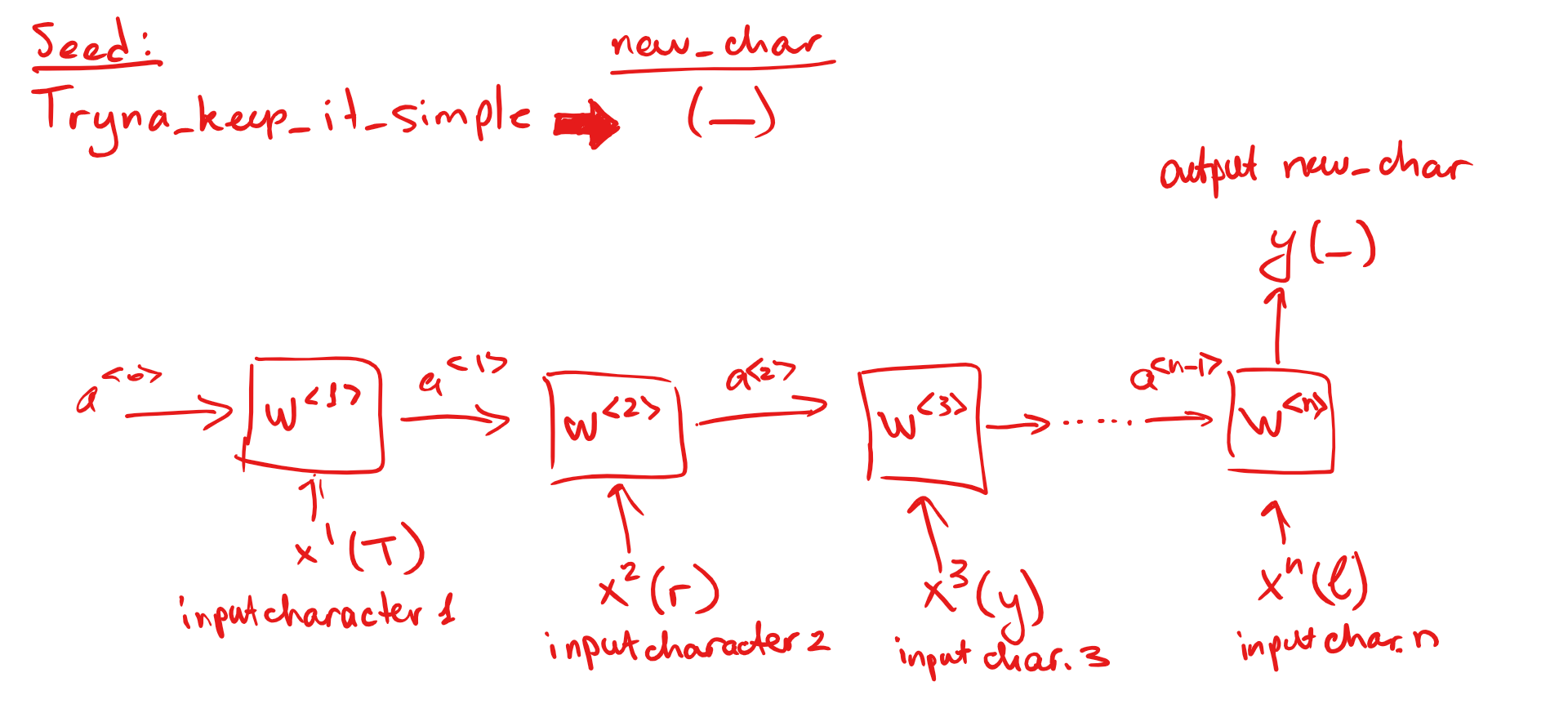

In the case of a character-level model your input is a series of characters seedand your model is responsible for predicting the next character new_char. Then you use the seed + new_char together to generate the next character and so on. Note, since your network input must always be of the same shape, we are actually going to lose one character from the seed on every iteration of this process. Here is a simple visualization:

At every iteration, the model is basically making a prediction what is the next most likely character given the seed characters or using conditional probability, this can be described as finding the maximum P(new_char|seed) , where new_char is any character from the alphabet. In our case, the alphabet is a set of all English letters and a space character. (Note, your alphabet can be very different and can contain any characters that you want, depends on the language that you are building the model for).

Word Level Model

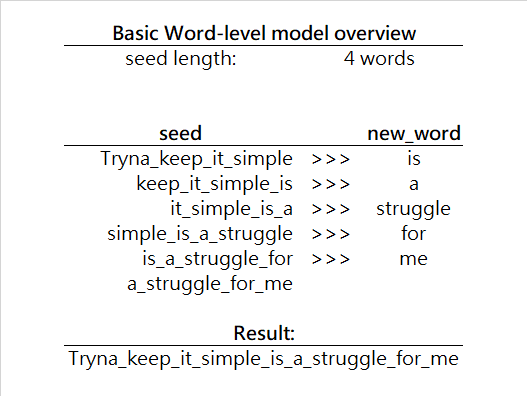

Word level model is almost the same as the character one, but it generates the next word instead of the next character. Here is a simple example:

Now, in this model, we are looking ahead by one unit, but this time our unit is a word, not a character. So, we are looking for P(new_word|seed) , where new_word is any word from our vocabulary.

Notice, that now we are searching through a much larger set than before. With alphabet, we searched through approximately 30 items, now we are searching through many more items at every iteration, hence the word-level algorithm is slower on every iteration, but since we are generating a whole word instead of a single character, it is actually not that bad at all. As a final note on our Word-level model, we can have a very diverse vocabulary and we usually develop it by finding all unique words from our dataset (usually done in data preprocessing stage). Since vocabularies can get infinitely large, there are many techniques that improve the efficiency of the algorithm, such as Word-Embeddings, but that is for a later article.

For the purposes of this article, I’m going to focus on the character level model because it is simpler in its implementation and understanding of Character-level model can be easily transferred to a more complex Word-level model later.

3. Data Preprocessing

For the Character-level model, we are going to have to preprocess the data in the following ways:

-

Tokenize the dataset — When we are feeding the inputs into the model, we don’t want to be feeding in just strings, we want to be working with characters instead since this is a Character-level model. So we are going to split all lines of lyrics into lists of characters.

-

Define the alphabet —Now, that we know every single kind of character that might appear in the lyrics (from previous tokenization step), we want to find all of the unique characters. For the sake of simplicity and the fact that the entire dataset is not that large (I’m only using 140 songs), I’m going to stick to English alphabet and also a couple special characters (like spaces) and will ignore all numbers and other stuff (Since the dataset is small, I’d rather have my model predict fewer characters).

-

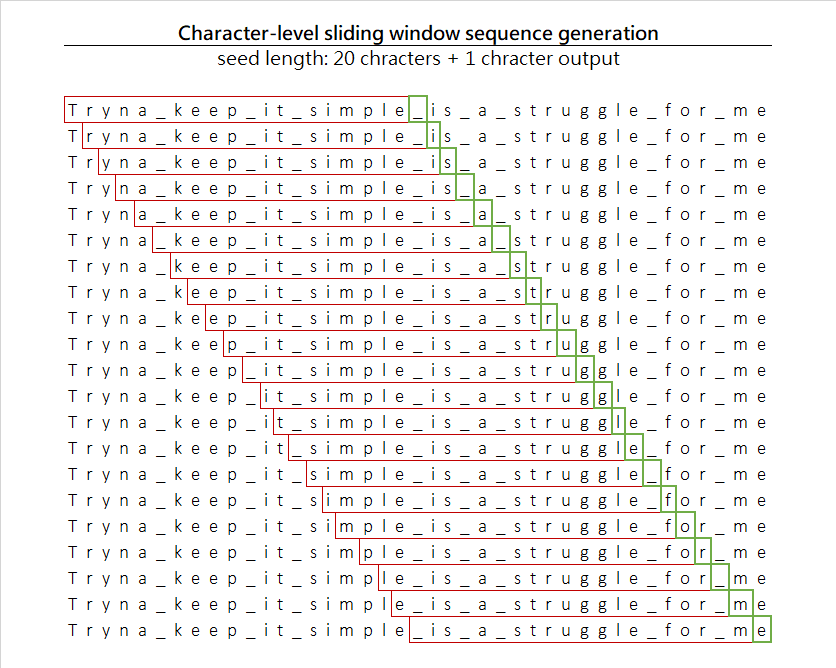

Create training sequences — We are going to use an idea of a sliding window and create a set of training examples by sliding a window of fixed size over a sentence. Here is a nice way to visualize this:

By moving one character at a time, we are generating inputs of the length of 20 characters and a single output character. In addition, as a bonus, since we are moving one character at a time, we are actually significantly expanding the size of our dataset.

4. Label Encode training sequences —Finally, since we don’t want the model to be working with raw characters (though it’s possible in theory because a character is technically just a number, so you could almost say that ASCII encoded all of the characters for us). We are going to associate a unique integer number with each character in our alphabet, something that you might have heard of as Label Encoding. This is also the time when we create two very important mappings character-to-index and index-to-character . With these two mappings, we can always encode any of the characters into its unique integer and also decode the output of the model from an index back to its original character.

5. One-Hot-Encode the dataset — Since we are working with categorical data, where all characters fall under some kind of category, we are going to have to encode out input columns. Here is a great description of what One-Hot-Encoding actually does by Rakshith Vasudev.

Once we are done with these five steps, we are pretty much done, now all we have to do is build the model and train it. Here is the code of the previous five steps, if you want to dive deeper into details.

# load all songs

songs = pd.read_csv('data/drake-songs.csv')

# merge all the lyrics together into one huge string

for index, row in songs['lyrics'].iteritems():

text = text + str(row).lower()

# find all the unique chracters

chars = sorted(list(set(text)))

print('total chars:', len(chars))

# create a dictionary mapping chracter-to-index

char_indices = dict((c, i) for i, c in enumerate(chars))

# create a dictionary mapping index-to-chracter

indices_char = dict((i, c) for i, c in enumerate(chars))

# cut the text into sequences

maxlen = 20

step = 1 # step size at every iteration

sentences = [] # list of sequences

next_chars = [] # list of next chracters that our model should predict

# iterate over text and save sequences into lists

for i in range(0, len(text) - maxlen, step):

sentences.append(text[i: i + maxlen])

next_chars.append(text[i + maxlen])

# create empty matrices for input and output sets

x = np.zeros((len(sentences), maxlen, len(chars)), dtype=np.bool)

y = np.zeros((len(sentences), len(chars)), dtype=np.bool)

# iterate over the matrices and convert all characters to numbers

# basically Label Encoding process and One Hot vectorization

for i, sentence in enumerate(sentences):

for t, char in enumerate(sentence):

x[i, t, char_indices[char]] = 1

y[i, char_indices[next_chars[i]]] = 1

4. Defining the model

Usually, you see networks that look like a web and converge from many nodes to a single output. Something like this:

Here we have a single point of input and a single output. This works great for inputs that are not consecutive, where the order of inputs does not affect the output. But in our case, the order of characters is actually very important because the specific order of characters is what creates unique words.

RNNs tackle this issue by creating a network that takes in consecutive inputs and also uses the activation from the previous node as a parameter for the next one.

Remember our case of a sequence Tryna_keep_it_simple and we extracted that the next character after that should be _ . This is exactly what we want our network to do. We are going to input sequences of characters where each character goes into T — > s<1>, r -> x<2>, n -> x<3>... e-> x<n> and the network predicts an output y -> _ , which is a space and is our next character.

LSTM refresher

Simple RNNs have one problem to them, they are not very good at passing information from very early cells to the later ones. For example, if you are looking at a sentence Tryna keep it simple is a struggle for me predicting that last word me (could be literally anyone or anything like Baka, cat, potato) It is very difficult if you can’t look back and see what other words appeared before.

LSTMs solve this problem by adding a little memory to every cell that stores some information about what happened before (what words appeared previously), and that’s why LSTMs look like this:

As well as passing the a<n> activation, you are also passing the c<n> which contains information on what happened in previous nodes. That’s why LSTMs are better at preserving the context and can generally make better predictions for purposes like Language Modeling.

5. Building the Model

I learned a bit of Keras before, so I used it as the framework to build the network, but in reality, this can be done by hand, the only difference is that it will take a lot longer.

# create sequential network, because we are passing activations

# down the network

model = Sequential()

# add LSTM layer

model.add(LSTM(128, input_shape=(maxlen, len(chars))))

# add Softmax layer to output one character

model.add(Dense(len(chars)))

model.add(Activation('softmax'))

# compile the model and pick the loss and optimizer

model.compile(loss='categorical_crossentropy', optimizer=RMSprop(lr=0.01))

# train the model

model.fit(x, y, batch_size=128, epochs=30)

As you can see, we are using an LSTM model and we are also using batching, which means that we training on subsets of data instead of all of it at once to slightly speed up the training process.

6. Generating Lyrics

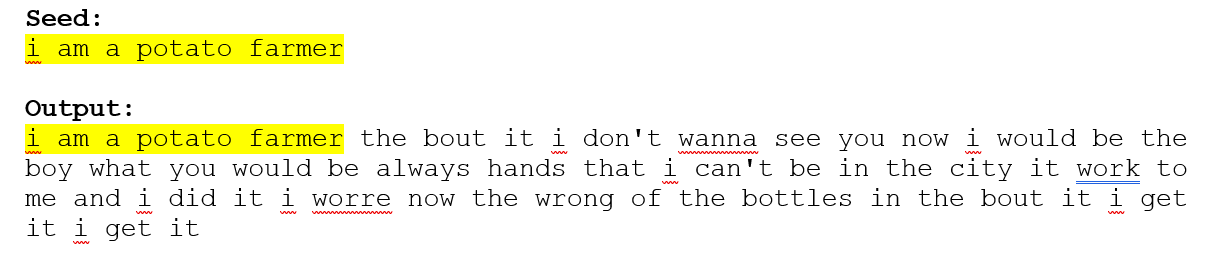

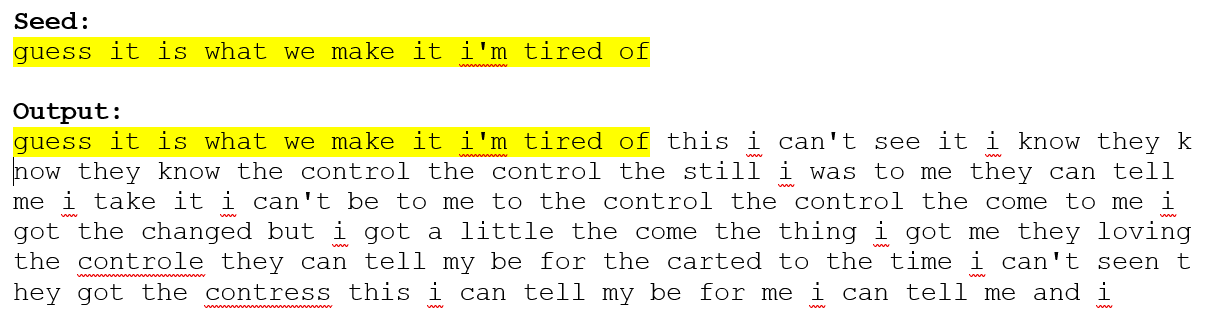

After our network is trained, here is how we are going to look for the next character. We are going to get some random seed, which is going to be a simple string input by a user. Then we are going to use the seed as an input to the network to predict the next character, and we will repeat this process until we have generated a bunch of new lines; similar to Figure 2 shown above.

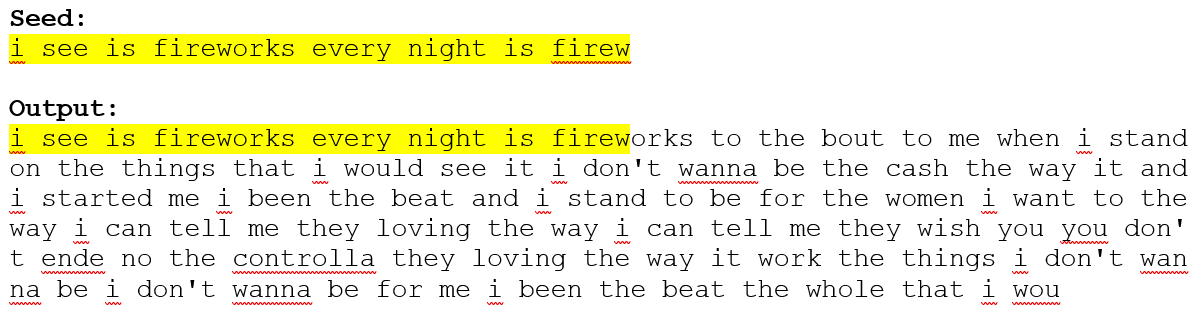

Below are some examples of generated lyrics

Note: the lyrics are not censored, so view at your own discretion

You might have noticed that words sometimes make no sense and that is a very common problem with Character-level models, due to the fact that input data is often sliced in the middle of the word, which makes the network learn and generate weird new words that somehow make sense to it.

This is the issue that gets addressed with the Word-level models, but for less than 200 lines of code, Character-level models are still very impressive.

7. Applications

Ideas described in this Character-level network can be expanded to many other applications that are far more useful than a lyric generation.

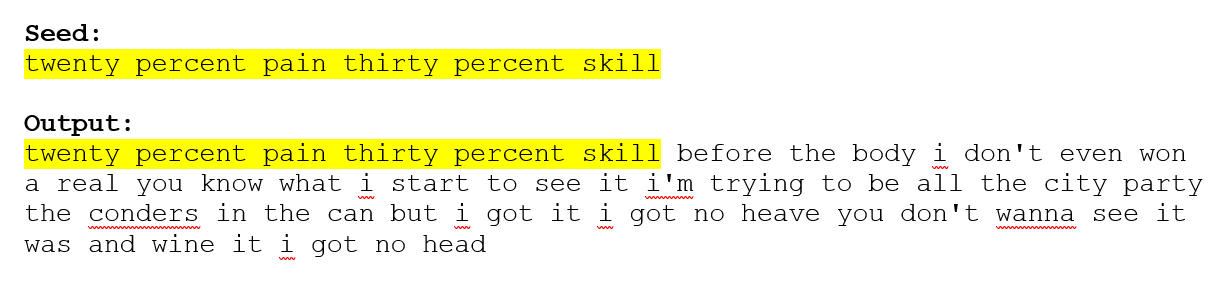

For example, the next word recommendations on your iPhone keyboard work the same way.

Imagine if you build an accurate enough Python Language Model, you could autocomplete not only keywords or variable names but also large chunks of code, saving programmers tons of time.

You might have noticed that the code is not complete here and some of the pieces are missing, here is the link to my Github repo, where you can dive much deeper into the details of building a similar project yourself.

Credits to Keras’ example of from Github

8. Closing Notes

In all, I hope you enjoyed the blog post 👍. Note I’ve originally posted this content a few years ago on Medium.

I’m currently on the verge of graduating from university and always looking for new opportunities. Feel free to reach out if you are working on something exciting, my email is nikolaevra@gmail.com

Really great post. This was a very interesting use case for LSTM. I have mostly worked with predictions based on Time-Series Data when it comes to LSTM. It was a fun read.

I'm glad you liked it!

You could save Drake a lot of money on ghostwriters with this.

Really nice post, Ruslan. Exemplary Python code. Also interesting to see its potential application with keyboard suggestions and code completion. I've seen kite.com are working on the latter.

If you're still looking for opportunities it might be worth looking at Kite's careers page or you can also check out the Able jobs section at able.bio/jobs.

haha yes, no more ghostwriters for Drake

Thank you for the careers pointers, I will take a look!

Not so long ago I was looking for a quality and reliable site where I could play my favourite slot machines in Australia, and I found such a site - sky crown cаsino online login - https://sky-crown.casinologinaustralia.com/. I recommend you to try this site, because you can play interesting gambling games on it!